Microservices

Before microservices, software systems were deployed as a whole, with clients accessing the system through APIs. The business logic modules handled requests and accessed the database to store or update objects. While this architecture resembles the distributed systems we've discussed, it differs in that the entire application is built and deployed as a single service, known as a monolithic application.

Monolithic applications have several advantages: they are easy to understand, and their deployment, testing, and monitoring are easy to automate. Scaling up the hardware is the simplest way to scale the system, and scaling out is also possible if needed. However, as more features are added, a monolith tends to become complicated and hard to change or test. Scaling out one part of the system also requires scaling out the entire application, which increases costs.

Splitting the monolithic application into several smaller, self-contained microservices, each with its own database if needed, makes adding features easier. Because a change is confined to one service, teams can roll out changes with more confidence, and each team can choose its own preferred technology stack. Scaling out one microservice doesn't affect the others, which reduces costs.

However, there are trade-offs to consider when splitting features into microservices. For example:

- Extra network calls between microservices add latency

- When each microservice has its own database, data duplication is unavoidable. Still, the cost of data duplication is small compared to the cost of refactoring multiple microservices

- Microservices expose many different IP addresses and ports, which can change frequently. An API gateway or the Backend for Frontend pattern can give clients a single entry point and route their requests to the right service. An API gateway usually adds little latency and also provides authentication, authorization, rate limiting, caching, and monitoring support, among other benefits.
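To make the routing idea concrete, here is a minimal Python sketch of only the request-routing part of an API gateway. The `ApiGateway` class, the path prefixes, and the `10.0.0.x` addresses are hypothetical; a real gateway would also forward the HTTP request and apply authentication, rate limiting, and caching, which this sketch omits.

```python
from __future__ import annotations


class ApiGateway:
    """Hypothetical gateway: clients call one address, the gateway picks the backend."""

    def __init__(self, routes: dict[str, str]) -> None:
        # Path prefix (without a trailing "/") -> current upstream base URL.
        self._routes = dict(routes)

    def set_upstream(self, prefix: str, upstream: str) -> None:
        # Backend addresses change often; only the gateway's table is updated,
        # so clients keep calling the same gateway address.
        self._routes[prefix] = upstream

    def resolve(self, path: str) -> str:
        # Try the longest prefixes first, so "/orders/history" could override "/orders".
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path == prefix or path.startswith(prefix + "/"):
                return self._routes[prefix] + path
        raise LookupError(f"no route for {path!r}")


gateway = ApiGateway({
    "/users": "http://10.0.0.5:8080",
    "/orders": "http://10.0.0.7:9000",
})
print(gateway.resolve("/users/42"))  # http://10.0.0.5:8080/users/42
gateway.set_upstream("/orders", "http://10.0.0.9:9000")  # the order service moved
print(gateway.resolve("/orders/7"))  # http://10.0.0.9:9000/orders/7
```

Because clients only ever know the gateway's address, moving the order service to a new host changes one table entry rather than every client.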

Despite the potential trade-offs, microservices have many advantages over monolithic applications, making them an increasingly popular choice for software architecture. By breaking down complex systems into smaller, more manageable pieces, teams can develop, test, and deploy faster, leading to better customer experiences and faster time to market.

Principles of microservices

The following seven principles guide the design and development of a microservices-based distributed system.

| # | Principle | Description |
|---|---|---|
| 1 | Business-domain-centered | Microservices should be defined based on a specific business domain, with consideration given to communication and performance trade-offs between microservices |
| 2 | Highly observable | Observability is crucial in distributed systems, allowing teams to monitor latency, errors, logs, and other metrics to ensure the system behaves as expected |
| 3 | Implementation details should be hidden | Microservices should be treated as black boxes with hidden implementation details, enabling teams to use their preferred technology stacks |
| 4 | Decentralize processing (*) | The processing of requests that involve downstream microservices should be decentralized to prevent bottlenecks and increase system resilience |
| 5 | Failure isolation | The failure of a single microservice should not affect the functionality of the entire system. Strategies to achieve this include proper error handling, fallbacks, and retries |
| 6 | Independent deployment | Each microservice should be deployable independently of the others, giving teams the flexibility to deploy and update their services as needed |
| 7 | Automation-focused culture | Automation is crucial for developing and deploying microservices, enabling the creation of more robust systems that can handle changes more easily |

(*) Decentralize processing

There are two primary approaches for handling requests that call multiple microservices: centralized orchestration and peer-to-peer choreography.

In the orchestration approach, the workflow logic is managed within a single microservice. This microservice communicates with other microservices and sends results back to clients. On the other hand, in the choreography approach, multiple microservices talk directly to each other on demand.
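The difference can be sketched in Python with a hypothetical order workflow (inventory, payment, shipping). Plain function calls stand in for network calls between microservices, and all service and function names are illustrative assumptions rather than a prescribed design.

```python
from __future__ import annotations

# Each step just records what it did, so the two styles can be compared.


def reserve_stock(order_id: int, log: list[str]) -> None:
    log.append(f"inventory: reserved stock for order {order_id}")


def charge_payment(order_id: int, log: list[str]) -> None:
    log.append(f"payment: charged order {order_id}")


def schedule_shipping(order_id: int, log: list[str]) -> None:
    log.append(f"shipping: scheduled order {order_id}")


# Orchestration: one service owns the whole workflow, calls the others in
# order, and sends the result back to the client.
def order_orchestrator(order_id: int) -> list[str]:
    log: list[str] = []
    reserve_stock(order_id, log)
    charge_payment(order_id, log)
    schedule_shipping(order_id, log)
    return log


# Choreography: there is no coordinator; each service calls the next one
# itself, so the workflow is spread across the services.
def inventory_service(order_id: int, log: list[str]) -> None:
    reserve_stock(order_id, log)
    payment_service(order_id, log)


def payment_service(order_id: int, log: list[str]) -> None:
    charge_payment(order_id, log)
    shipping_service(order_id, log)


def shipping_service(order_id: int, log: list[str]) -> None:
    schedule_shipping(order_id, log)


def place_order_choreographed(order_id: int) -> list[str]:
    log: list[str] = []
    inventory_service(order_id, log)  # the client only calls the first service
    return log
```

Both versions perform the same steps; what differs is where the workflow logic lives. In the orchestrated version it is all in `order_orchestrator`, while in the choreographed version each service only knows which service to call next.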

As with any architectural decision, each approach comes with its own set of trade-offs. For example, monitoring requests is much simpler with orchestration than with choreography, because the whole workflow lives in one service. However, that orchestrating service can become a single point of failure.

If you're interested in learning more about these two approaches, you can check out this article.

Resilience in microservices

This section discusses solutions to cascading failures in a microservices-based architecture. Handling them well is critical, because system failures tend to happen at inconvenient moments.

Cascading failures

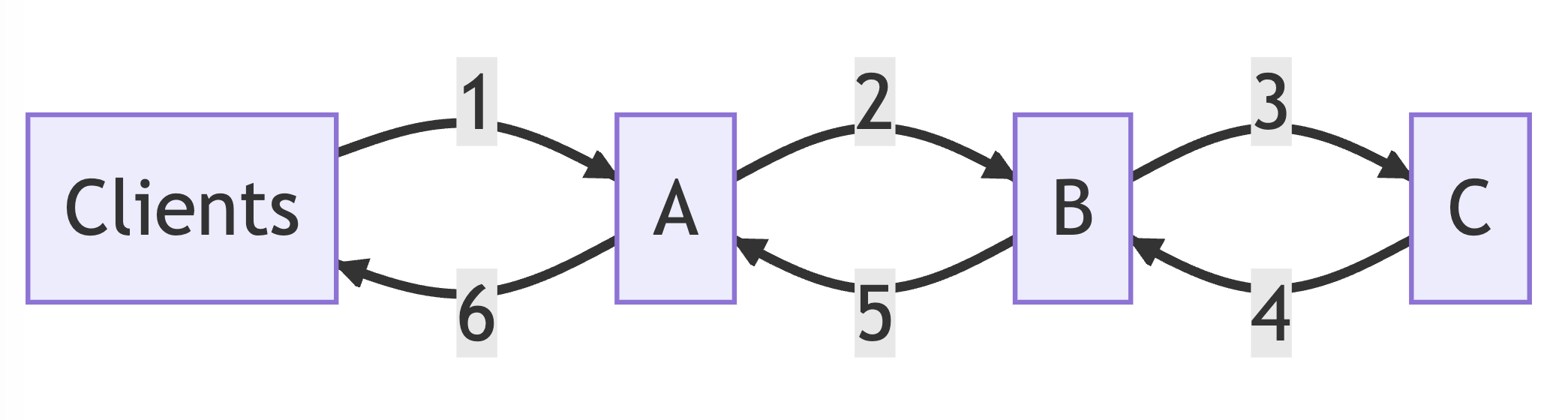

Assume a request needs to call services A, B, and C, as shown in the figure above. If C is unable to respond for some reason, the requests to A and B fail as well.

As traffic increases, C becomes overwhelmed and cannot recover quickly. Retrying repeatedly is not a viable solution, since it puts even more load on C, and the overload may continue for many seconds or minutes. Exponential backoff retries can help reduce the overload, but latency still increases. There are three primary solutions to cascading failures: the Fail Fast pattern, the Circuit Breaker pattern, and the Bulkhead pattern.
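A minimal sketch of exponential backoff retries in Python follows. The function name, the default delays, and the use of random "full jitter" are assumptions for illustration; `sleep` is a parameter so the waits can be observed or skipped in tests.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay_s: float = 0.1,
    max_delay_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call`, doubling the maximum wait after each failure."""
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            # Exponential cap: 0.1 s, 0.2 s, 0.4 s, ... but never above max_delay_s.
            cap = min(max_delay_s, base_delay_s * 2 ** attempt)
            # Full jitter: wait a random time up to the cap, so many clients
            # retrying at once do not hit the struggling service in lockstep.
            sleep(random.uniform(0, cap))
    raise ValueError("max_attempts must be at least 1")
```

The waits add up (up to 0.1 s, then up to 0.2 s, then up to 0.4 s, and so on), which is the latency cost described above: backoff eases the load on C but does not make the request faster.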

Fail fast pattern

The Fail Fast pattern reduces long response times. There are two main approaches:

- Respond with an error when a request exceeds a predefined timeout (e.g., the P99 latency). This releases the resources the request was holding

- Respond with a 503 error when the traffic load reaches a predefined threshold. This is referred to as rate limiting or throttling
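Both approaches can be sketched with Python's `asyncio`. `FailFastGate` is a hypothetical helper: its in-flight counter stands in for whatever load metric a real service would throttle on, and returning 504 on timeout is an assumption, since the text only says to respond with an error.

```python
from __future__ import annotations

import asyncio
from typing import Any, Awaitable, Callable


class FailFastGate:
    """Hypothetical wrapper that rejects work early instead of letting it pile up."""

    def __init__(self, timeout_s: float, max_in_flight: int) -> None:
        self.timeout_s = timeout_s          # e.g. the observed P99 latency
        self.max_in_flight = max_in_flight  # load threshold for throttling
        self.in_flight = 0

    async def handle(self, work: Callable[[], Awaitable[Any]]) -> tuple[int, Any]:
        # Approach 2: load is over the threshold, so reject immediately with 503.
        if self.in_flight >= self.max_in_flight:
            return 503, "Service Unavailable"
        self.in_flight += 1
        try:
            # Approach 1: stop waiting after the timeout; wait_for cancels the
            # timed-out task so it stops holding this service's resources.
            return 200, await asyncio.wait_for(work(), self.timeout_s)
        except asyncio.TimeoutError:
            return 504, "Gateway Timeout"
        finally:
            self.in_flight -= 1


async def demo() -> list[tuple[int, Any]]:
    async def fast() -> str:
        return "ok"

    async def slow() -> str:
        await asyncio.sleep(1)  # simulates a stuck downstream call
        return "late"

    gate = FailFastGate(timeout_s=0.05, max_in_flight=1)
    results = [await gate.handle(fast), await gate.handle(slow)]
    busy = asyncio.create_task(gate.handle(slow))  # occupies the only slot
    await asyncio.sleep(0)                         # let it start
    results.append(await gate.handle(fast))        # over the limit: 503
    results.append(await busy)
    return results


print(asyncio.run(demo()))
# [(200, 'ok'), (504, 'Gateway Timeout'), (503, 'Service Unavailable'), (504, 'Gateway Timeout')]
```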

Circuit breaker pattern

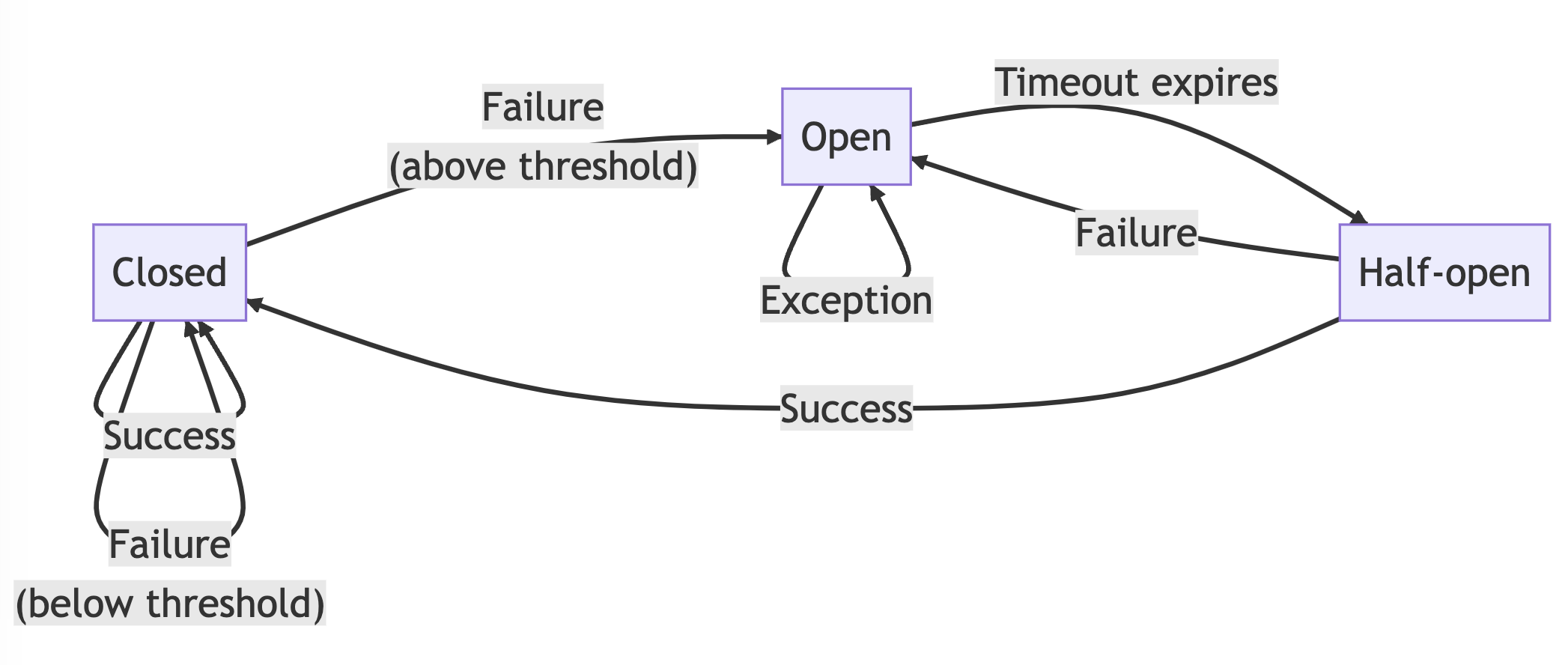

The Circuit Breaker pattern is another way to reduce long response times, and it is quite similar to exponential backoff retries. When the response error rate reaches a threshold (e.g., 10%), the circuit breaker moves to the OPEN state and rejects all requests. After a timeout period, the circuit breaker moves to the HALF-OPEN state and lets requests through to the service again. In the HALF-OPEN state, if requests still fail, the timeout period is reset and the circuit breaker moves back to the OPEN state. If requests succeed, the circuit breaker moves to the CLOSED state and requests are processed as normal.
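The state machine described above can be sketched in Python as follows. The class and parameter names are hypothetical, and the sketch simplifies things: it counts calls since the breaker last closed instead of using a sliding time window, treats the first request after the timeout as the HALF-OPEN trial, and is not thread-safe. The injectable `clock` makes the timeout easy to test.

```python
import time
from enum import Enum
from typing import Any, Callable


class State(Enum):
    CLOSED = "CLOSED"
    OPEN = "OPEN"
    HALF_OPEN = "HALF-OPEN"


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(
        self,
        failure_rate: float = 0.10,   # trip when >= 10% of calls fail ...
        min_calls: int = 10,          # ... but only after this many calls
        open_timeout_s: float = 5.0,  # how long to stay OPEN before a trial
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_rate = failure_rate
        self.min_calls = min_calls
        self.open_timeout_s = open_timeout_s
        self.clock = clock
        self.state = State.CLOSED
        self.calls = 0
        self.failures = 0
        self.opened_at = 0.0

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.state is State.OPEN:
            if self.clock() - self.opened_at < self.open_timeout_s:
                raise CircuitOpenError("circuit is OPEN; failing fast")
            self.state = State.HALF_OPEN  # timeout elapsed: let a request through
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self._record_failure()
            raise
        self._record_success()
        return result

    def _trip(self) -> None:
        self.state = State.OPEN
        self.opened_at = self.clock()  # (re)start the timeout period

    def _record_success(self) -> None:
        if self.state is State.HALF_OPEN:
            # Trial succeeded: close the circuit and start counting afresh.
            self.state = State.CLOSED
            self.calls = self.failures = 0
        else:
            self.calls += 1

    def _record_failure(self) -> None:
        if self.state is State.HALF_OPEN:
            self._trip()  # still failing: back to OPEN with a fresh timeout
            return
        self.calls += 1
        self.failures += 1
        if self.calls >= self.min_calls and self.failures / self.calls >= self.failure_rate:
            self._trip()
```

Wrapping each call to service C as `breaker.call(call_c)`, where `call_c` is a hypothetical client function, lets callers fail fast with `CircuitOpenError` while C recovers instead of piling more requests onto it.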

Bulkhead pattern

The Bulkhead pattern limits the resources that each feature in a service can use, so that one feature cannot starve the others. For example:

- In a service with two endpoints, A and B, the service uses a shared thread pool to process requests sent to these two endpoints

- If endpoint A uses all the threads in the thread pool, there will be no threads available to process requests sent to endpoint B

- The Bulkhead pattern limits the number of threads endpoint A can use (e.g., 100 threads). This ensures that threads remain available to process requests sent to endpoint B with an acceptable response time
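A minimal Python sketch of the example above: one shared `ThreadPoolExecutor` serves both endpoints, and a `threading.BoundedSemaphore` per endpoint caps how many of its threads each endpoint may occupy. The `Bulkhead` class, the small limits (2 of 4 threads instead of 100), and the choice to reject rather than queue excess requests are illustrative assumptions.

```python
from __future__ import annotations

import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


class BulkheadFullError(Exception):
    """Raised when an endpoint has no free slots, so the caller can fail fast."""


class Bulkhead:
    """Caps how many threads one endpoint may occupy at the same time."""

    def __init__(self, name: str, max_concurrent: int) -> None:
        self.name = name
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def run(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        # Reject immediately instead of queueing behind a saturated endpoint.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError(f"endpoint {self.name} is at capacity")
        try:
            return fn(*args, **kwargs)
        finally:
            self._slots.release()


def demo() -> tuple[str, str]:
    endpoint_a = Bulkhead("A", max_concurrent=2)  # A may hold at most 2 of the 4 threads
    endpoint_b = Bulkhead("B", max_concurrent=2)
    unblock = threading.Event()
    started = threading.Semaphore(0)

    def slow_a() -> str:
        started.release()
        unblock.wait()  # simulates endpoint A stuck on a slow downstream call
        return "A done"

    with ThreadPoolExecutor(max_workers=4) as pool:  # one pool shared by A and B
        try:
            for _ in range(2):
                pool.submit(endpoint_a.run, slow_a)
            started.acquire()
            started.acquire()  # both A requests now hold their slots
            rejected = pool.submit(endpoint_a.run, slow_a).exception()
            served = pool.submit(endpoint_b.run, lambda: "B ok").result()
        finally:
            unblock.set()  # let the stuck A requests finish so the pool can shut down
    return type(rejected).__name__, served


print(demo())  # ('BulkheadFullError', 'B ok')
```

Without the per-endpoint limit, four slow A requests could occupy every thread in the shared pool and B would wait behind them; with it, the third A request is rejected and B is still served.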

Key notes

- Microservices are a software architectural style where an application is composed of many independent services that communicate with each other over a network

- Seven principles guide the design and development of a microservices-based distributed system

- There are two basic approaches to processing requests that call multiple microservices: centralized orchestration and peer-to-peer choreography

- Cascading failures can occur in microservices when one microservice's failure causes others to fail as well

- There are three main solutions to cascading failures: the fail-fast pattern, the circuit breaker pattern, and the bulkhead pattern