Event-driven architecture

Events are packets of information that one part of a system produces and other parts consume. For instance:

- When user A receives chat messages from user B, an event is generated to send a push notification to user A

- When the batch inference service completes the prediction process, an event is generated to notify the data scientist

- A data drift detector that runs periodically generates an event containing data drift information

Events are typically published to a messaging system, and interested services can register to receive and process them.

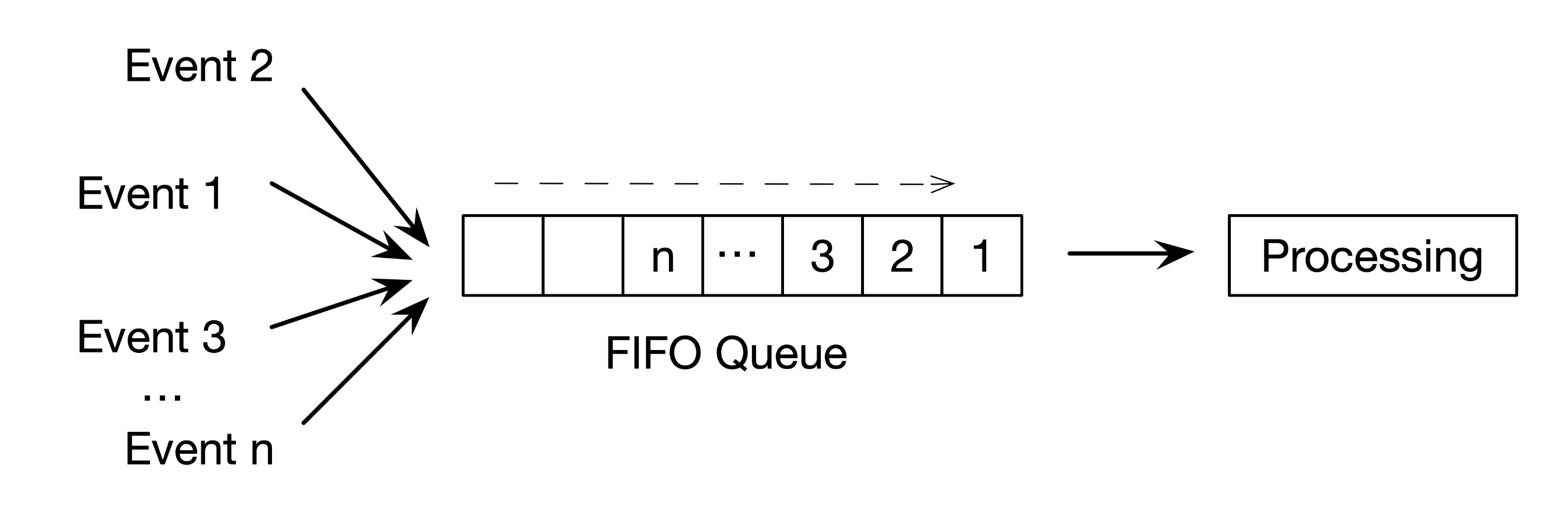
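
The publish/subscribe flow described above can be sketched with a toy in-memory broker. This is only an illustration, not how any particular messaging system is implemented: `InMemoryBroker`, the topic name `chat.message_received`, and the handler are all hypothetical names, and delivery here is synchronous, whereas a real broker would deliver asynchronously over the network.

```python
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class InMemoryBroker:
    """Toy publish/subscribe broker (hypothetical stand-in for a messaging system)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        # An interested service registers a handler for a topic.
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, event: dict[str, Any]) -> None:
        # Deliver the event to every handler registered for the topic.
        for handler in self._subscribers.get(topic, []):
            handler(event)


notifications: list[str] = []


def send_push_notification(event: dict[str, Any]) -> None:
    # Stand-in for a real push notification service.
    notifications.append(f"push to {event['to']}: new message from {event['from']}")


broker = InMemoryBroker()
broker.subscribe("chat.message_received", send_push_notification)
broker.publish(
    "chat.message_received",
    {"from": "user_b", "to": "user_a", "text": "hi"},
)
```

The publisher does not know which services are listening; adding another subscriber only requires another `subscribe` call.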

In asynchronous messaging systems that use First-In, First-Out (FIFO) queues, such as RabbitMQ, the broker removes an event once all subscribers have consumed it. In contrast, some messaging systems use an event log to store events durably. In an event log, records are appended to the end of the log, and each log entry is assigned a unique, increasing entry number (called an offset in Apache Kafka), which determines the order of events.
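
As a rough sketch of the event log idea, the code below keeps entries in an append-only list and uses the list index as the entry number. The `EventLog` class and the consumer names are hypothetical; a real log would persist entries to disk and partition them.

```python
from typing import Any


class EventLog:
    """Append-only log; the list index serves as the entry number (offset)."""

    def __init__(self) -> None:
        self._entries: list[Any] = []

    def append(self, event: Any) -> int:
        self._entries.append(event)
        return len(self._entries) - 1  # entry number of the new record

    def read(self, from_offset: int = 0) -> list[tuple[int, Any]]:
        # Reading never removes entries, unlike consuming from a queue.
        return [(i, self._entries[i]) for i in range(from_offset, len(self._entries))]


log = EventLog()
log.append({"type": "drift_detected", "feature": "age"})
log.append({"type": "batch_inference_done", "job": "job-1"})

# Each consumer tracks its own position in the log.
offsets = {"alerting": 0, "dashboard": 0}

batch = log.read(offsets["alerting"])
offsets["alerting"] = batch[-1][0] + 1  # alerting has now seen entries 0 and 1

log.append({"type": "drift_detected", "feature": "income"})

new_for_alerting = log.read(offsets["alerting"])    # only entry 2
all_for_dashboard = log.read(offsets["dashboard"])  # entries 0, 1 and 2
```

Because entries stay in the log, a consumer that starts late (here, `dashboard`) still sees the full history.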

There are several advantages of using an event log data structure:

- A new consumer can access the entire history of events, as the log stores permanent and immutable records of events

- New event-processing logic can be executed on the complete log to add new features or fix bugs

- Services can rely on the event log to reconstruct their last state in case of crashes, similar to a transaction log in databases
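
To illustrate the last point, here is a minimal sketch of a service rebuilding its state by replaying a log from the beginning. The event types (`stock_added`, `stock_removed`) and the stock-level service are invented for the example.

```python
def apply_event(stock: dict[str, int], event: dict) -> dict[str, int]:
    """Return the stock levels after applying a single event."""
    updated = dict(stock)
    sku, quantity = event["sku"], event["quantity"]
    if event["type"] == "stock_added":
        updated[sku] = updated.get(sku, 0) + quantity
    elif event["type"] == "stock_removed":
        updated[sku] = updated.get(sku, 0) - quantity
    return updated


def replay(events: list[dict]) -> dict[str, int]:
    """Rebuild state from scratch by applying every event in log order."""
    stock: dict[str, int] = {}
    for event in events:
        stock = apply_event(stock, event)
    return stock


event_log = [
    {"type": "stock_added", "sku": "mug", "quantity": 10},
    {"type": "stock_removed", "sku": "mug", "quantity": 3},
    {"type": "stock_added", "sku": "pen", "quantity": 5},
]

# After a crash, the in-memory state is lost and recomputed from the log.
recovered = replay(event_log)
```

The same replay mechanism covers the second point as well: fixing a bug in `apply_event` and replaying the log yields corrected state without changing the stored events.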

However, because an event log is append-only and immutable, deleting events is difficult. There are several solutions, such as:

- Attaching a time to live (TTL) to a log entry, where log entries are deleted after a specified time

- Configuring topics to retain only the most recent entry for a given event key. If an existing log entry needs to be deleted, a new entry with the same key and a null value (a tombstone) can be written. This technique is known as log compaction, or compacted topics, in Apache Kafka
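
Both deletion strategies can be sketched over a small in-memory log. The `Entry` type and the `expire` and `compact` functions are hypothetical helpers, not a real broker API. As a simplification, `compact` drops tombstones immediately, whereas a real broker typically keeps them for a while so that slow consumers can still observe the deletion.

```python
from typing import NamedTuple, Optional


class Entry(NamedTuple):
    offset: int
    key: str
    value: Optional[str]  # None acts as a tombstone (delete marker)
    timestamp: float      # seconds


def expire(entries: list[Entry], now: float, ttl_seconds: float) -> list[Entry]:
    """TTL-based retention: keep only entries younger than the TTL."""
    return [e for e in entries if now - e.timestamp <= ttl_seconds]


def compact(entries: list[Entry]) -> list[Entry]:
    """Key-based compaction: keep the latest entry per key, drop tombstoned keys."""
    latest: dict[str, Entry] = {}
    for entry in entries:  # entries are in offset order, so later ones win
        latest[entry.key] = entry
    survivors = [e for e in latest.values() if e.value is not None]
    return sorted(survivors, key=lambda e: e.offset)


entries = [
    Entry(0, "user-1", "a@example.com", 0.0),
    Entry(1, "user-2", "b@example.com", 10.0),
    Entry(2, "user-1", "a2@example.com", 20.0),
    Entry(3, "user-2", None, 30.0),  # tombstone: delete user-2
]

compacted = compact(entries)                        # only offset 2 survives
recent = expire(entries, now=40.0, ttl_seconds=15)  # only offset 3 survives
```

Compaction keeps the log bounded by the number of distinct keys, while TTL bounds it by time; which one fits depends on whether consumers need the latest value per key or only recent history.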

Stream processing introduction

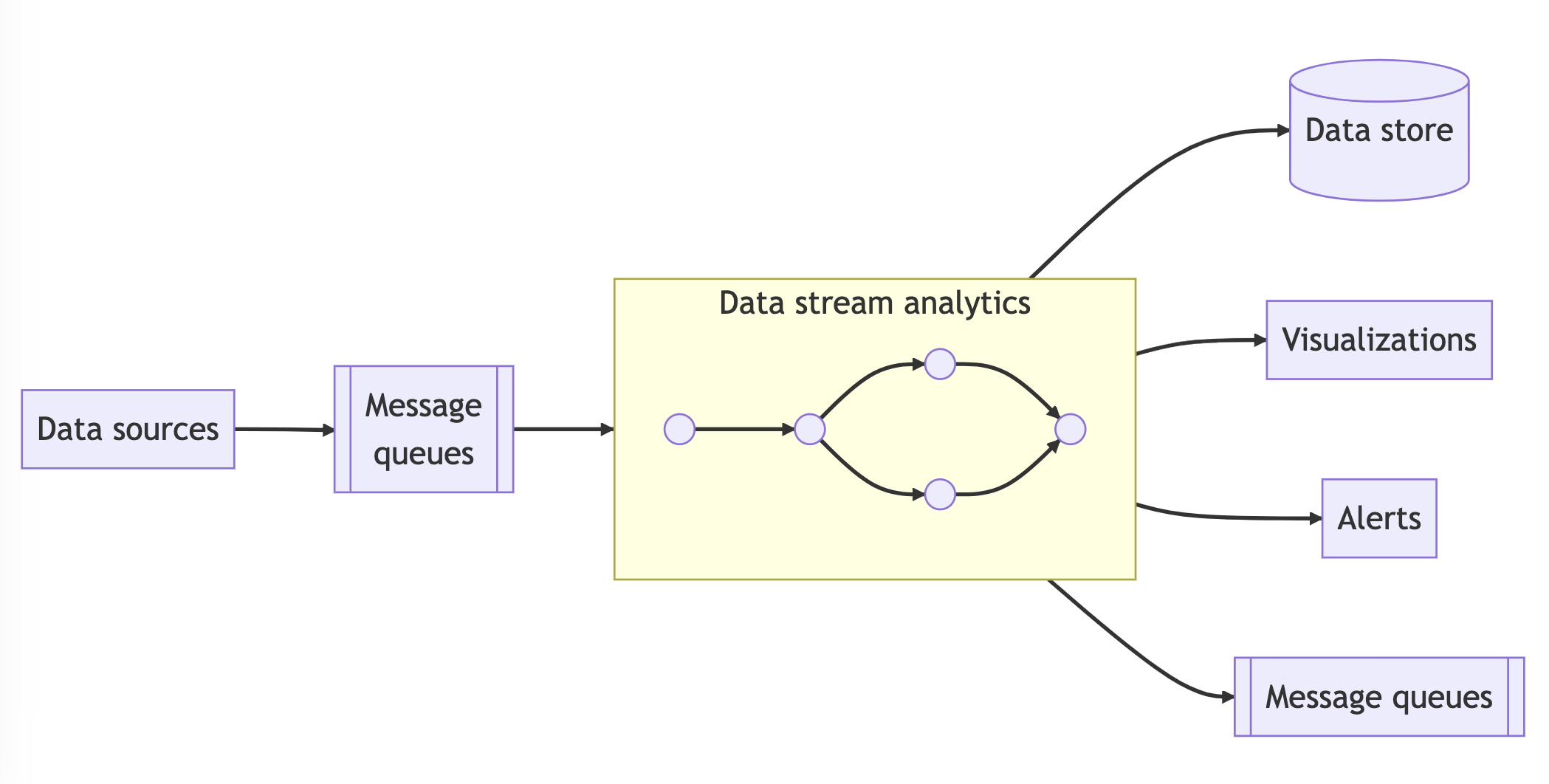

Stream processing systems aim to process data streams in memory as the data arrives, without first persisting it. This approach is also known as data-in-motion or real-time analytics.

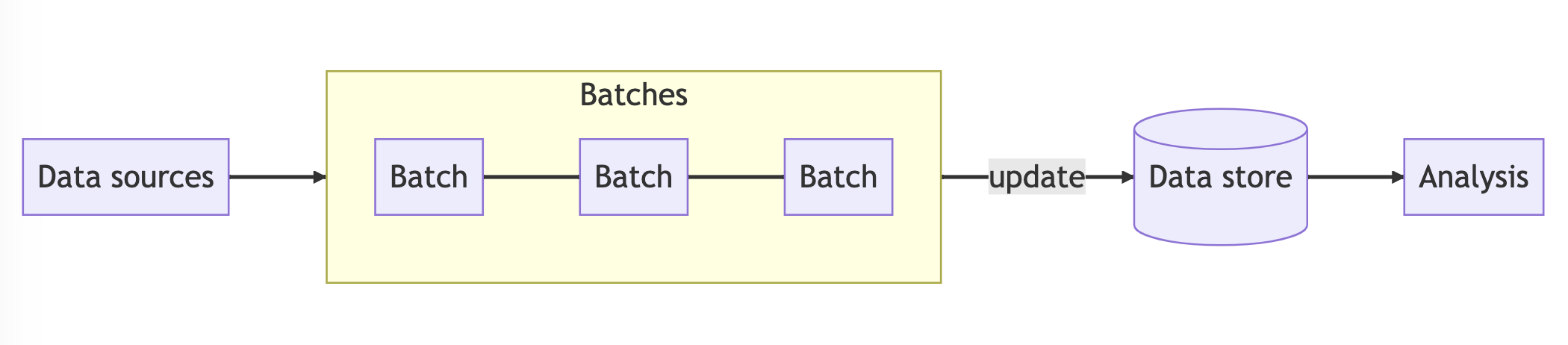

In batch processing systems, raw data is accumulated into files, and a service runs periodically to perform an extract, transform, load (ETL) process that aggregates and transforms the data so that it can be inserted into storage. This process can take minutes to hours.

Some use cases, such as fraud detection, require real-time analytics and therefore stream processing. The definition of real-time may vary depending on the application, but it generally means processing data within less than a second to a few seconds.

The table below provides a comparison between stream processing and batch processing.

| | Batch processing | Stream processing |
|---|---|---|
| Batch size | Millions or billions of data entries | Single events to microbatches of thousands to tens of thousands of data entries |
| Latency | Minutes to hours | Subseconds to seconds |
| Analytics | Complex, involving both new and existing data | Simple, involving newly arrived data within a specified time window |
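
The microbatches mentioned in the table can be illustrated with a small generator that groups an incoming stream into fixed-size chunks. This is a sketch only: `micro_batches` is a hypothetical helper, and it batches purely by count, whereas streaming frameworks usually also flush a batch after a time interval.

```python
from itertools import islice
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def micro_batches(stream: Iterable[T], batch_size: int) -> Iterator[list[T]]:
    """Yield consecutive lists of up to batch_size items from the stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # stream exhausted
        yield batch


batches = list(micro_batches(range(7), batch_size=3))
# batches == [[0, 1, 2], [3, 4, 5], [6]]
```

Setting `batch_size=1` corresponds to processing single events; larger values trade latency for throughput.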

Stream processing platforms

Applications that run on a stream processing platform can be categorized into two types, depending on whether they need to maintain state across events.

The first type of stream processing application processes each event independently, without requiring context or state from other events. The results are then written to databases or asynchronous messaging queues. An example of this is processing the stream of heartbeat data from a smartwatch. This type of application is referred to as a stateless streaming application.
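
A stateless application can be sketched as a pure function applied to each event in turn. The event fields (`device`, `bpm`) and the alert thresholds below are illustrative assumptions, not values from any real device or medical guideline.

```python
from typing import Iterable, Iterator


def flag_heart_rate(event: dict) -> dict:
    """Annotate one heartbeat event; uses nothing but the event itself."""
    bpm = event["bpm"]
    # Thresholds are arbitrary and chosen only for the example.
    return {**event, "alert": bpm < 40 or bpm > 180}


def process(stream: Iterable[dict]) -> Iterator[dict]:
    # No state is carried from one event to the next.
    for event in stream:
        yield flag_heart_rate(event)


heartbeats = [
    {"device": "watch-1", "bpm": 72},
    {"device": "watch-1", "bpm": 190},
    {"device": "watch-2", "bpm": 35},
]

results = list(process(heartbeats))
alerts = [r["alert"] for r in results]  # [False, True, True]
```

Because each event is handled in isolation, such an application can be scaled out by simply running more copies of the same function.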

The second type of stream processing application maintains state that persists across the processing of data objects in the stream. For instance, a system that analyzes high-demand products needs to keep track of the number of items sold for each product in the last hour. This type of application is referred to as a stateful streaming application.
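
The high-demand products example can be sketched as a sliding one-hour window of sales. The `HourlySalesCounter` class is hypothetical, keeps its state in memory only, and assumes events arrive in non-decreasing timestamp order. A production system would also need to checkpoint this state and handle late or out-of-order events.

```python
from collections import Counter, deque


class HourlySalesCounter:
    """Tracks units sold per product within a sliding time window."""

    def __init__(self, window_seconds: float = 3600.0) -> None:
        self.window_seconds = window_seconds
        self._events = deque()    # (timestamp, product_id, quantity), oldest first
        self._totals = Counter()  # running units sold per product in the window

    def add(self, timestamp: float, product_id: str, quantity: int) -> None:
        self._events.append((timestamp, product_id, quantity))
        self._totals[product_id] += quantity
        self._evict(timestamp)

    def _evict(self, now: float) -> None:
        # Remove sales that have fallen out of the window and undo their counts.
        while self._events and self._events[0][0] <= now - self.window_seconds:
            _, product_id, quantity = self._events.popleft()
            self._totals[product_id] -= quantity
            if self._totals[product_id] == 0:
                del self._totals[product_id]

    def top(self, n: int, now: float) -> list[tuple[str, int]]:
        self._evict(now)
        return self._totals.most_common(n)


counter = HourlySalesCounter()
counter.add(0.0, "mug", 2)
counter.add(1800.0, "pen", 5)
counter.add(3000.0, "mug", 1)

top_at_3000 = counter.top(2, now=3000.0)  # [("pen", 5), ("mug", 3)]
top_at_3700 = counter.top(2, now=3700.0)  # [("pen", 5), ("mug", 1)]
top_at_5500 = counter.top(2, now=5500.0)  # [("mug", 1)]
```

The deque and the running totals are exactly the state that must survive between events, which is what distinguishes this from the stateless case.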

Key notes

- Event-driven architecture is a design pattern in which events, rather than direct calls between services, trigger actions

- Stream processing platforms are software frameworks that enable the processing of continuous streams of data in real-time

- Stateless streaming applications process each event independently, without requiring context or state from other events

- Stateful streaming applications require state that persists across the processing of data objects in the stream

- Event-driven architectures can use stream processing platforms to process and analyze data in real-time